Understanding the node.js event loop

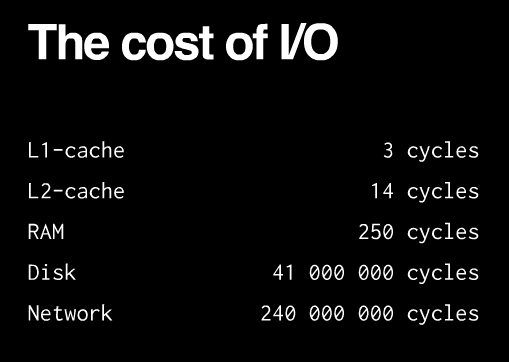

The first basic thesis of node.js is that I/O is expensive:

So the largest waste with current programming technologies comes from waiting for I/O to complete. There are several ways in which one can deal with the performance impact (from Sam Rushing):

- synchronous: you handle one request at a time, each in turn. Pros: simple. Cons: any one request can hold up all the other requests.

- fork a new process: you start a new process to handle each request. Pros: easy. Cons: does not scale well; hundreds of connections means hundreds of processes. fork() is the Unix programmer's hammer. Because it's available, every problem looks like a nail. It's usually overkill.

- threads: start a new thread to handle each request. Pros: easy, and kinder to the kernel than using fork, since threads usually have much less overhead. Cons: your machine may not have threads, and threaded programming can get very complicated very fast, with worries about controlling access to shared resources.

The second basic thesis is that thread-per-connection is memory-expensive: [e.g. that graph everyone shows about Apache sucking up memory compared to Nginx]

Apache is multithreaded: it spawns a thread per request (or a process, depending on the configuration). You can see how that overhead eats up memory as the number of concurrent connections increases and more threads are needed to serve multiple simultaneous clients. Nginx and Node.js are not multithreaded, because threads and processes carry a heavy memory cost. They are single-threaded, but event-based. This eliminates the overhead created by thousands of threads/processes by handling many connections in a single thread.

Node.js keeps a single thread for your code...

It really is a single thread running: you can't do any parallel code execution; doing a "sleep", for example, will block the server for one second:

    var now = new Date().getTime();
    while (new Date().getTime() < now + 1000) {
      // do nothing
    }

So while that code is running, node.js will not respond to any other requests from clients, since it only has one thread for executing your code. Likewise, if you had some CPU-intensive code, say, for resizing images, that would still block all other requests.
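A minimal sketch of the effect, assuming a recent Node.js: the hypothetical helper `busyWait` spins the CPU the same way as the loop above, and a timer that was asked to fire after 10 ms cannot run until the loop gives the thread back. The names and durations are illustrative only.

```javascript
// Hypothetical helper: spin the CPU for `ms` milliseconds, like the loop above.
function busyWait(ms) {
  const start = Date.now();
  while (Date.now() - start < ms) {
    // do nothing; the single JavaScript thread is occupied
  }
  return Date.now() - start; // elapsed time in milliseconds
}

const scheduledAt = Date.now();

// Ask for a callback after 10 ms...
setTimeout(function () {
  console.log('timer ran after ' + (Date.now() - scheduledAt) + ' ms');
}, 10);

// ...but keep the thread busy for 200 ms. The timer callback cannot run
// until this call returns, so it fires late.
busyWait(200);
```

On a typical machine the reported delay should be close to the 200 ms of busy work rather than the 10 ms that was requested.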

...however, everything runs in parallel except your code

There is no way of making code run in parallel within a single request. However, all I/O is evented and asynchronous, so the following won't block the server:

    c.query(
      'SELECT SLEEP(20);',
      function (err, results, fields) {
        if (err) {
          throw err;
        }
        res.writeHead(200, {'Content-Type': 'text/html'});
        res.end('<html><head><title>Hello</title></head><body><h1>Return from async DB query</h1></body></html>');
        c.end();
      }
    );

If you do that in one request, other requests can be processed just fine while the database is running its sleep.
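The same behaviour can be reproduced without a database. In this sketch the hypothetical `fakeQuery` stands in for `c.query` and uses `setTimeout` to imitate a slow query; it is an assumption made for illustration, not the API of any MySQL client.

```javascript
const log = [];

// Hypothetical stand-in for c.query(): the "query" runs somewhere else for
// delayMs milliseconds, then the result is handed to the callback.
function fakeQuery(sql, delayMs, callback) {
  setTimeout(function () {
    callback(null, [{ sql: sql }]);
  }, delayMs);
}

// Request 1 starts a slow query and returns immediately.
log.push('request 1: query sent');
fakeQuery('SELECT SLEEP(20);', 100, function (err, results) {
  if (err) {
    throw err;
  }
  log.push('request 1: query finished');
  console.log(log.join('\n'));
});

// Request 2 is handled while the "database" is still busy.
log.push('request 2: handled');
```

The entry for request 2 is recorded before the query callback runs, which is the point of the example above: waiting for I/O does not hold up other work.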

Why is this good? When do we go from sync to async/parallel execution?

Having synchronous execution is good, because it simplifies writing code (compared to threads, where concurrency issues have a tendency to result in WTFs). In node.js, you aren't supposed to worry about what happens in the backend: just use callbacks when you are doing I/O, and you are guaranteed that your code is never interrupted and that doing I/O will not block other requests, without having to incur the costs of a thread or process per request (e.g. memory overhead in Apache).

Having asynchronous I/O is good, because I/O is more expensive than most code and we should be doing something better than just waiting for I/O.

An event loop is "an entity that handles and processes external events and converts them into callback invocations". So I/O calls are the points at which Node.js can switch from one request to another. At an I/O call, your code saves the callback and returns control to the node.js runtime environment. The callback will be called later when the data actually is available.
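To make the definition concrete, here is a toy event loop in plain JavaScript. It is only a sketch of the idea: `ToyEventLoop`, `on`, `push` and `run` are names invented for this example, and this is not how Node.js is implemented internally.

```javascript
// A toy event loop: external events go into a queue, and run() turns each
// one into a call to the callback registered for that event type.
function ToyEventLoop() {
  this.handlers = {}; // event type -> callback
  this.queue = [];    // pending events, oldest first
}

// Save a callback for later (what your code does at an I/O call).
ToyEventLoop.prototype.on = function (type, callback) {
  this.handlers[type] = callback;
};

// Record that something happened outside your code (data arrived, etc.).
ToyEventLoop.prototype.push = function (type, data) {
  this.queue.push({ type: type, data: data });
};

// Process events one at a time; each callback runs to completion.
ToyEventLoop.prototype.run = function () {
  while (this.queue.length > 0) {
    const event = this.queue.shift();
    const callback = this.handlers[event.type];
    if (callback) {
      callback(event.data);
    }
  }
};

const loop = new ToyEventLoop();
const seen = [];
loop.on('db-result', function (rows) { seen.push('db: ' + rows.length + ' rows'); });
loop.on('file-read', function (text) { seen.push('file: ' + text); });

loop.push('file-read', 'hello');
loop.push('db-result', [1, 2, 3]);
loop.run();
console.log(seen); // callbacks ran in the order the events arrived
```

Note that callbacks run in the order the events arrive, not the order in which they were registered, and that nothing else runs while a callback is executing.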

Of course, on the backend, there are threads and processes for DB access and process execution. However, these are not explicitly exposed to your code, so you don't need to worry about them beyond knowing that I/O interactions (e.g. with the database or with other processes) will be asynchronous from the perspective of each request, since the results from those threads are returned via the event loop to your code. Compared to the Apache model, there are far fewer threads and much less thread overhead, since threads aren't needed for each connection; they are used only when you absolutely positively must have something else running in parallel, and even then the management is handled by Node.js.

Other than I/O calls, Node.js expects that all requests return quickly; e.g. CPU-intensive work should be split off to another process with which you can interact via events, or handled by an abstraction like WebWorkers. This (obviously) means that you can't parallelize your code without another thread in the background with which you interact via events. Basically, all objects which emit events (i.e. are instances of EventEmitter) support asynchronous evented interaction, and you can interact with blocking code in this manner, e.g. using files, sockets or child processes, all of which are EventEmitters in Node.js. Multicore can be done using this approach; see also: node-http-proxy.
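As a sketch of splitting CPU-intensive work off to another process, the example below uses `child_process.spawn` from the Node.js core library. The helper `runInChild` and the summing job are hypothetical, chosen only to have something CPU-bound to run.

```javascript
const { spawn } = require('child_process');
const { EventEmitter } = require('events');

// Hypothetical CPU-heavy job, written as source text for the child process.
const job = 'let sum = 0; for (let i = 0; i < 1e7; i++) sum += i; console.log(sum);';

// Run `source` in a separate Node.js process and report back via callback.
function runInChild(source, callback) {
  const child = spawn(process.execPath, ['-e', source]);
  let output = '';
  let finished = false;

  function finish(err, result) {
    if (finished) return; // make sure the callback is called only once
    finished = true;
    callback(err, result);
  }

  child.stdout.on('data', function (chunk) { output += chunk; });
  child.on('error', finish);
  child.on('close', function (code) {
    if (code !== 0) {
      finish(new Error('child exited with code ' + code));
    } else {
      finish(null, output.trim());
    }
  });
  return child;
}

const child = runInChild(job, function (err, result) {
  if (err) throw err;
  console.log('sum computed in child process:', result);
});

// The parent's event loop is free while the child does the work.
console.log('child is an EventEmitter:', child instanceof EventEmitter);
```

The parent only ever sees events (`data`, `close`, `error`), so the heavy loop never occupies the thread that serves requests.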

Internal implementation

Internally, node.js relies on libev to provide the event loop, which is supplemented by libeio which uses pooled threads to provide asynchronous I/O. To learn even more, have a look at the libev documentation.
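From your code's point of view the thread pool is invisible: you only see a callback that arrives later. A minimal sketch, assuming a current Node.js release (where libuv has taken over the role described above for libev and libeio); `process.execPath` is used only because it is a file that is certain to exist.

```javascript
const fs = require('fs');

const order = [];

// The stat call is handed to a background worker thread; our code does not wait.
order.push('stat requested');
fs.stat(process.execPath, function (err, stats) {
  if (err) {
    throw err;
  }
  // Back on the main thread, invoked by the event loop.
  order.push('stat result: ' + stats.size + ' bytes');
  console.log(order.join('\n'));
});

// Runs before the callback above, even though it comes later in the file.
order.push('carrying on with other work');
```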

So how do we do async in Node.js?

Tim Caswell describes the patterns in his excellent presentation:

- First-class functions. E.g. we pass around functions as data, shuffle them around and execute them when needed.

- Function composition. Also known as having anonymous functions or closures that are executed after something happens in the evented I/O.
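Both patterns fit in a few lines. In this sketch `onEachLine` and `makeLineCounter` are hypothetical names, and an `EventEmitter` stands in for an I/O source such as a socket.

```javascript
const { EventEmitter } = require('events');

// First-class functions: `handler` is plain data that we pass in, and
// the emitter calls it later, once per 'line' event.
function onEachLine(source, handler) {
  source.on('line', handler);
}

// Closure: the returned function keeps access to `count` and `label`
// after makeLineCounter has returned.
function makeLineCounter(label) {
  let count = 0;
  return function (line) {
    count += 1;
    return label + ' #' + count + ': ' + line;
  };
}

const source = new EventEmitter(); // stands in for a socket or file stream
const received = [];
const counter = makeLineCounter('got');

onEachLine(source, function (line) {
  received.push(counter(line)); // anonymous callback, run when the event fires
});

source.emit('line', 'first');
source.emit('line', 'second');
console.log(received);
```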

Comments


chrelad: Thanks for writing up this nice intro to the node.js event loop.

Adrian: Very good description of the event loop. I still have a ton more to learn but this got me on the right path. Node is insane!

Matt Freeman: [quote] The queries will run in parallel provided that the I/O library supports this (e.g. via connection pooling). [/quote]

What has db connection pooling got to do with parallel query execution???

Mikito Takada: Well, it really depends on the case you're thinking about, but what I'm trying to say is that the underlying limitations of the I/O library determine the manner in which stuff gets executed. If you only have a single connection to the DB such as MySQL, then you'd only be able to launch one query at a time.

E.g. "The MySQL Client-Server Protocol has no build-in support for asynchronous query execution. The state transition is always SEND - RECEIVE. After sending a query you always have to fetch its result in the very next step." (http://blog.ulf-wendel.de/?p=170)

You can probably find counterexamples to this, however - notably the Node core modules.

Willy Wiggler: I don't get this at all - you claim that node.js is better because it doesn't spawn off a bunch of threads and/or processes to deal with synchronous processing, but then you claim that the node runtime spawns off threads & processes for DB access, etc...

I'm very skeptical that node.js would scale better than a more traditional thread-per-request model. The reality is that the node runtime will have to have some number of threads running to do the async IO. I fail to see how this is beneficial over having a thread pool to consume incoming requests.

I'd love to see some benchmarks that compare node.js implementations against other stacks.

Mikito Takada: Well, Node is not a silver bullet - but the thread-per-request model is pretty heavy due to overhead from processes.

And while some parts of Node will still use threads, it's not one process for each request but rather based on the actual work you need to do. Also, Node defaults to using asynchronous APIs where available (which is pretty much in all the core APIs now). See http://blog.zorinaq.com/?e=34

Take epoll, for example, which offers async filesystem I/O and is the secret sauce behind Nginx and libev on Linux.

Here is an illustration of the overhead with epoll (nginx) vs. non-epoll (Apache).

http://blog.webfaction.com/a-little-holiday-present

Tons of other benchmarks via Google, take them all with a grain of salt!

Shawn Adrian: Great intro to this, answered some of my questions. I'd love to see some basic code examples added to illustrate your points though, would help a bunch! Thanks for writing.

Jared: This was a great article, thanks for taking the time to illustrate the brilliance behind "event loop" based systems. The one thing I wish people would point out is there is still a way to use node.js to handle heavy computation. While programming for the client side we all realized that setTimeout could be used on a heavy loop statement to maintain responsiveness. e.g.

instead of: for (var x = 0 ; x < 40000000; x++){ //do something crazy. }

var later = function(x, callback){ if (x<40000000){ //do something crazy. later(x+1, callback); } else {callback();} };

later(0, function(){alert("done");});

So let's not be scared to do something computationally expensive. Let's just be smart with how we write it. On the client side we learned that, so why is it so hard to learn it for the server side?

I really enjoyed your article. Thanks

Battur: In your example code you did not use setTimeout to maintain responsiveness. Recursively calling your later function many times does not bring anything good in terms of responsiveness. On top of that each invocation of later function will create a number of new objects related to new execution context, which will eat the memory quickly until it calls the passed in callback function and gets garbage collected.

Mikito Takada: Agreed - you'll run out of stack space.

I'd say that when the task is "do something computationally intensive fast" on the server side, the answer is not "let's use a coding trick that makes the runtime slightly more responsive" e.g. setTimeout or process.nextTick().

Node is fast for a dynamic language, but the event loop is not intended to make computationally intensive tasks responsive, it's meant to cut the resource waste related to waiting for I/O. If you saturate CPU, the solution is to find a more efficient algorithm, add caching and/or distribute the work as background tasks over several boxes.

Beo: Thanks for this explanation, you made it very clear.

Punit Pandey: Best explanation of Node.js benefits so far. Well done.

Jeff: Where did you get your I/O metrics at the beginning of the article? I'd like to reference them in an upcoming presentation if you don't mind. Thanks!

Aaron Hardy: Very well-written. Thank you.

Darío: Thank you very much for your article, it's just brilliant! By the way, loved your phrase "When I read what I write I learn what I think"!! Cheers

C2D: amazing post. Thank you.

Vijiay Rawat: Very well written. Helped me a lot.

cantbecool: Thanks for the introduction to Node's event loop.

D: What confuses me about node.js is that I don't get what you really gain going from threads to async calls. In the end, you only have one CPU and only one process or thread can run code through it at a time. Of course you could have multi cores but threads can take advantage of that just as well as multiple processes. So what are you gaining?

And yes, multi-threaded issues like race conditions are a nightmare, but it's not valid to just say "use multiple separate processes instead". Race conditions occur because your code has to have data shared across all threads. That's not something specific to threading, it's specific to the application you're writing. If you have shared data under multiple processes, you will have to implement inter-process communication, which is all a nightmare in its own right, and doesn't alleviate race conditions anyway.

And thirdly, if you only have one database running on a single box, how is this going to save you any time? Sure the web server will hand off the requests to the database server or file system very quickly but the database server has to serialize those requests to the disk to retrieve the data (ignoring caching that might occur at the database level). So really, you are only as parallelizable as your database server or file system. Of course, that's the case with threads as well, but again, the point is that I don't see what async non-blocking IO gets you over threads.

Mikito Takada: It's definitely not a silver bullet, but I find that with Node's evented async model, you get to write something that works quickly on a single process; since there is only one thread of execution, you gain simplicity. When it comes time to scale you skip thinking about scaling to multiple cores on a single machine and go directly to thinking about scaling to multiple machines. It's less efficient since instead of IPC you use sockets or an external service like Redis for a single machine, but I feel like the ability to scale by adding machines is more important. In my view, Node is about taking a simple idea (single-process event loops), and seeing how far one can go with it.

Mathias: I'm having a bit of trouble understanding this. Would node.js be usable for a blog where you have say 500 visitors a day? Or do the visitors have to wait in line to make requests and get a response?

Or another example: Would node.js work well for a proxy server doing API requests? Can the server send a request, then continue with 10 other requests, and right after the 5th request has started get a response for the first request, return that to the user asking for it, then continue with the 6th and so on?

Is this relevant at all? Just trying to understand.

Mathias: Got my email wrong, wheres the edit button?

Ed: Some of this may be misleading to folks, although it's a great article. There is an assumption in the blog that "everything else runs in parallel." And that is simply not true. Libeio and libev are themselves event loops which use epoll or some other AIO system to block until IO is ready.

I think the main issue is that folks get confused about IO. But IO - to Node - is anything that involves a system call for IO: sockets, disk, etc. This is not database queries such as MySQL or anything else requiring user-mode processing. IO is typically not CPU bound... so this model works well.

You will see some threads if you run node in gdb, but just a couple. I am now running on node 0.7 and my understanding is there will be a worker thread pool eventually...

Mathias asks a question above... Yes, it could be used by 100k people a day, so long as you process stuff fast - otherwise you will need to go spawn your own thread using some native module. This is why node, despite the hype, does not really scale well for CPU-bound work, but scales very well for IO-bound. Node does not take advantage of multi-core & multi-cpu boxes well. But there is nothing wrong with running a few nodes at once on a box (given you figure out all the port redirects, etc.)

Dan: Except modern webservers are not thread-per-request, many use threadpools. Usually, you have about 2x as many threads in your worker pool as cores. There is an acceptor thread that accepts incoming connections, and dispatches the request to threads in the worker pool. If there is a request that might take a bit of time to process, it doesn't prevent the other worker threads, or acceptor thread from doing their jobs. This architecture allows for utilizing all the available cores, without spawning tons of threads.

Seriously, Apache is slow. Tomcat as of 4-5 years ago, a JVM based server, was beating it on many benchmarks.

Michael Keisu: Thank you so much, this cleared up so much of my confusion.

Jayesh Mori: This is really helping. I am new to Node.JS and this helped understand what is going on behind the scene.

Ed: Replying to another Ed...

Ed: apologies...

Ed,

Call a spade for what it is. node.js is anticipation of having more devs deploying code onto the cloud in a shorter fashion.

What would come about when everyone realized that libraries used to get access to these slow I/O services relied upon threads? Would they spawn threads or processes to support it?

I'm going to start hacking on a threaded version of node.js

cheers,

ed

Jorge: For the "everything runs in parallel except your code" problem, there's the threads_a_gogo solution:

Jorge: https://github.com/xk/node-threads-a-gogo

Alex: What about INSERT DELAYED? That is non blocking
