Performance benchmarking the node.js backend of our 48h product, WeHearVoices.net

I decided to spend some time looking into improving the performance of the node.js backend of our 48h hackathon product We Hear Voices - a dead-simple but slick feedback tool for websites and web apps (go get it for your site ;).

Anyway, I didn't do any performance optimization during Garage48, since we were busy adding functionality rather than worrying about performance. As you can see at the end of this post, though, the backend is now a lot faster.

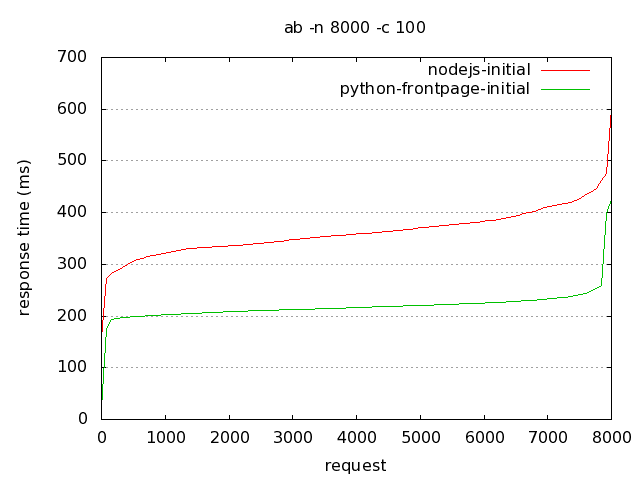

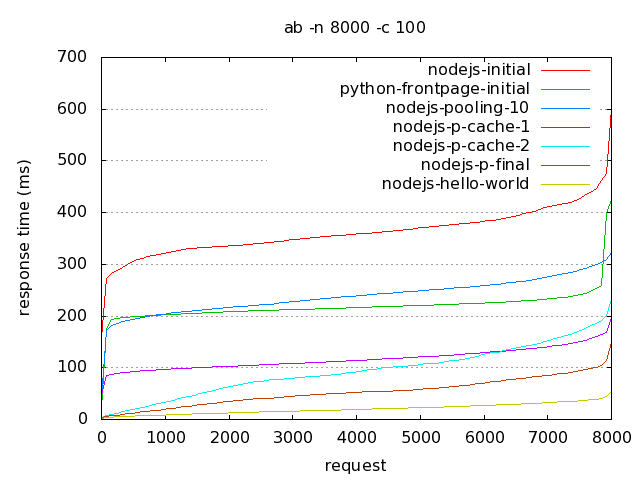

Here are my initial benchmarks, node.js vs Django:

The unoptimized backend hits the database on every request and opens a new MySQL client connection each time; it does not use any caching. Given this, node handles roughly 180 fewer requests per second than Django:

Node.js requests per second: 275.41 [#/sec] (mean)

Python (front page of site) requests per second: 454.49 [#/sec] (mean)

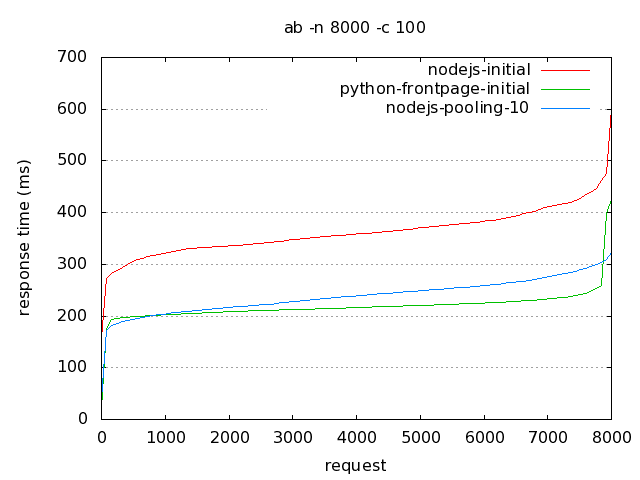

I decided to tackle the client connections first by implementing MySQL client pooling. Instead of creating new MySQL connections, the server uses a pool of 10 MySQL connections to perform queries.
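For readers wondering what pooling looks like in practice, here is a minimal sketch of a fixed-size pool. It is not the code running on WeHearVoices.net: the `Pool` class and the `createClient` factory are hypothetical names, the client is a fake object rather than a real MySQL connection, and it uses modern JavaScript (classes, promises) that the Node version of the time did not have.

```javascript
// Minimal fixed-size pool sketch. `createClient` is a hypothetical factory
// standing in for whatever opens a MySQL connection in a real backend.
class Pool {
  constructor(createClient, size) {
    this.idle = [];     // clients ready to be handed out
    this.waiting = [];  // callers queued until a client is released
    for (let i = 0; i < size; i++) this.idle.push(createClient());
  }

  // Hand out an idle client, or queue the caller until one is released.
  acquire() {
    if (this.idle.length > 0) return Promise.resolve(this.idle.pop());
    return new Promise((resolve) => this.waiting.push(resolve));
  }

  // Return a client: pass it to the next waiter, or put it back in the pool.
  release(client) {
    const next = this.waiting.shift();
    if (next) next(client);
    else this.idle.push(client);
  }

  // Run `work(client)` and always release the client afterwards.
  async use(work) {
    const client = await this.acquire();
    try {
      return await work(client);
    } finally {
      this.release(client);
    }
  }
}

// Usage with a fake client; a real one would wrap a MySQL connection.
const pool = new Pool(() => ({ query: async (sql) => `ran: ${sql}` }), 10);
pool.use((client) => client.query('SELECT 1')).then(console.log);
```

The point of the design is that the connection cost is paid once at startup, so each request only pays for the query itself.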

This brings Node.js to roughly the same level of performance as Django. Note that the queries are still there and still run on every request; we have only eliminated the latency of connecting to MySQL.

Node.js requests per second: 417.23 [#/sec] (mean)

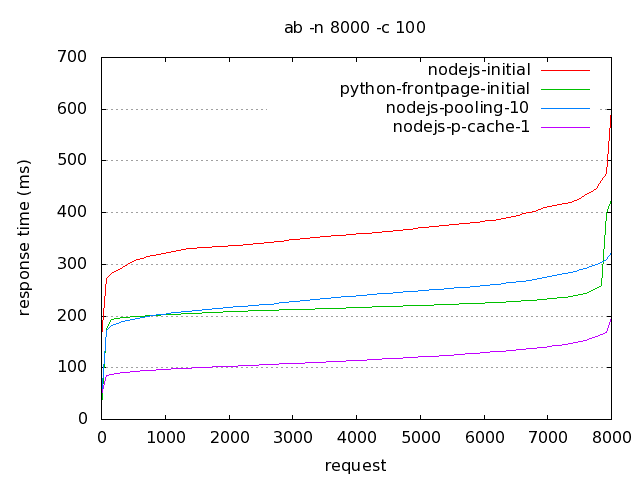

Next, I implemented caching for questions, with a cache lifetime of 30 minutes:

Now node.js is almost twice as fast as Django. Note that with this naive caching, a cached result is not expired immediately if the user changes the questions. For that, we need to expose a way for the frontend to tell the backend that the user updated the question, which is rather simple to implement (later).
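As an illustration of this kind of naive caching, here is a small time-to-live cache sketch with the 30 minute lifetime mentioned above. The `TtlCache` class, its injectable clock, and the `invalidate` method (the hook the frontend could call when a question changes) are assumptions made for the example, not the actual implementation.

```javascript
// Naive in-memory cache where each entry expires after `ttlMs` milliseconds.
// `now` is injectable so the expiry logic can be exercised without waiting.
class TtlCache {
  constructor(ttlMs, now = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map();
  }

  // Return the cached value, or undefined if it is missing or expired.
  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key);
      return undefined;
    }
    return entry.value;
  }

  // Store a value and stamp it with its expiry time.
  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Drop an entry early, e.g. when the user edits a question.
  invalidate(key) {
    return this.entries.delete(key);
  }
}

// 30 minute lifetime, as in the post.
const questionCache = new TtlCache(30 * 60 * 1000);
```

On a cache miss the backend would query MySQL and call `set`; without `invalidate`, an edited question could stay stale for up to 30 minutes.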

Node.js requests per second: 848.21 [#/sec] (mean)

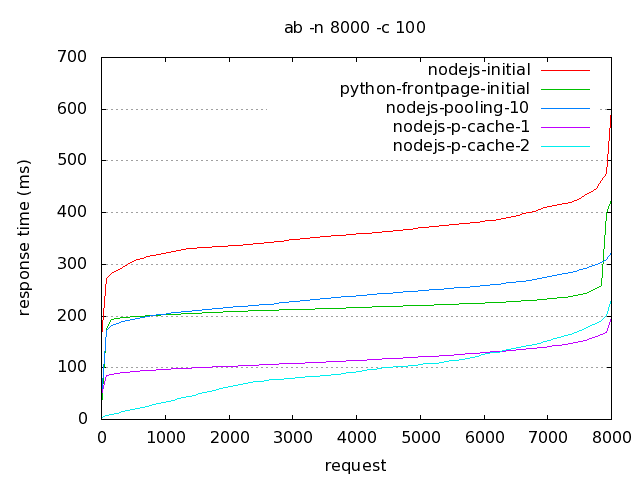

I added some more improvements:

This improves performance by nearly 300 more requests per second. There is some degradation at the very slowest requests, but most requests are faster (98% complete in less than 192 ms). I still need to look into that degradation, although the requests per second remained fairly constant even when I tested with 20 000 requests at a concurrency of 1000 simultaneous requests.

Node.js requests per second: 1135.72 [#/sec] (mean)

Then I refactored the code a bit more, and added request-level caching (which runs the minimum amount of logic while taking into account the necessity of getting and setting per-user cookies and per-referrer questions).
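One way to picture request-level caching is to split each request into a cheap per-user part (cookies) and an expensive per-referrer part (the questions) that can be served from memory. The sketch below is only a guess at that shape: `handle`, `renderQuestions`, the `visitor` cookie name, and the JSON payload are all hypothetical, not taken from the real backend.

```javascript
const crypto = require('crypto');

// Hypothetical cache of rendered payloads, keyed by referrer URL.
const bodyByReferrer = new Map();
let renderCalls = 0; // counts how often the expensive path runs

// Hypothetical stand-in for the expensive path (question lookup + rendering).
function renderQuestions(referrer) {
  renderCalls++;
  return JSON.stringify({ referrer, questions: ['How can we improve?'] });
}

// Read the visitor id from the Cookie header, if there is one.
function readVisitorId(cookieHeader) {
  const match = /(?:^|;\s*)visitor=([^;]+)/.exec(cookieHeader || '');
  return match ? match[1] : null;
}

// Takes lower-cased request headers, returns response headers and body.
function handle(headers) {
  const referrer = headers.referer || '';
  const responseHeaders = { 'Content-Type': 'application/json' };

  // Per-user work cannot be cached, so keep it minimal.
  if (!readVisitorId(headers.cookie)) {
    const visitorId = crypto.randomBytes(8).toString('hex');
    responseHeaders['Set-Cookie'] = `visitor=${visitorId}; Path=/`;
  }

  // Per-referrer work is done once, then served from memory.
  let body = bodyByReferrer.get(referrer);
  if (body === undefined) {
    body = renderQuestions(referrer);
    bodyByReferrer.set(referrer, body);
  }
  return { headers: responseHeaders, body };
}
```

After the first request for a given referrer, later requests only parse a cookie and do a map lookup.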

Node.js requests per second: 1879.86 [#/sec] (mean)

Time per request: 53.196 [ms] (mean)

Time per request: 0.532 [ms] (mean, across all concurrent requests)
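The two "Time per request" figures are related through the concurrency level of the test. ApacheBench computes the second figure as total time divided by completed requests, and the first as that value multiplied by the concurrency. The concurrency is not stated for this run, so the value below is inferred from the numbers rather than taken from the post.

```javascript
// Figures reported by ab for the final run.
const requestsPerSecond = 1879.86;
const meanTimePerRequestMs = 53.196;

// Mean across all concurrent requests is just the inverse of throughput.
const meanAcrossAllMs = 1000 / requestsPerSecond; // about 0.532 ms

// Dividing the two means recovers the concurrency level (inferred, not stated).
const impliedConcurrency = meanTimePerRequestMs / meanAcrossAllMs;

console.log(meanAcrossAllMs.toFixed(3), Math.round(impliedConcurrency));
```

In other words, an individual request waited about 53 ms, while the server as a whole completed one request roughly every half millisecond.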

The very lowest curve, nodejs-hello-world, is the performance of a node.js server which simply returns Hello World. We aren't quite at that level since we need to do some routing and cookie setting/getting.

There are a few special cases where performance is somewhere between nodejs-p-cache-2 (notably if you have two identical URLs mapped to different questions) and nodejs-p-final; but even those get cached after a couple of questions.

However, I am satisfied that any of our early users will get good performance out of the service. Testing the most recent code with 1000 concurrent users gives similar performance (50-60 ms) with a couple of outliers which take longer.

What this means is that even if you send us a continuous stream of traffic where 1800 users load a page each second, we can still cope with it :) (with some caveats, so maybe 1200+ requests per second would be more appropriate). I think the next problem is to get that traffic...

Comments

Ryan Dahl: What version of Node is this? What mysql library are you using and which version of it?

Mikito Takada: Node v.0.3.4 with felixge's node-mysql v0.9.0 on a Linode 512.

And if I may, let me do my best impression of a fan girl: OMG OMG OMG! It's Ryan Dahl! You're you! Huge fan.

Anthony Webb: What did you use for a connection pool with mysql? Also interested in how you were doing the caching.

David: What are you running Django on? If you're just running the built in Django server I would absolutely expect it to be slower... The built in server is purely for development and mostly unoptimized given that Django is a framework and not a server. If you were running Django under nginx or maybe something like gunicorn you may find it runs significantly faster, assuming you were running the django dev server. Incidentally, that would actually make it a fair test as django makes no claims that its built in server is production capable, stating quite the opposite in the docs.

Bart Czernicki: Stupid question...but the graph(s) you show are highly skewed...can't u also implement caching and pooling in Django?? (rhetorical question).

It would have been nice to see those performance improvements applied to BOTH frameworks/products. Its like me saying .NET is better than Java because I can do async programming/Generics/use advanced data structures etc. (examples both languages have).

Mikito Takada: To put this in context: Node was a lot newer a year ago and the point of the article was just to see what kind of performance we could get out of Node with a few optimizations. The Django curve is there just to provide some context; it was running behind nginx if my memory serves me right.